Do AI agents disrupt flow state?

Fringe Legal is back. After a stretch of inconsistent posting, I’m committing to a weekly cadence. Expect an email every Tuesday with one idea, a brief note on something I’ve been thinking about, and three finds.

Welcome to Epoch 2: Wave 1.

(For those wondering about “Epoch”: it’s a nod to how machine learning models train. Each epoch marks one full pass through the training data and a new chance to learn. This is mine.)

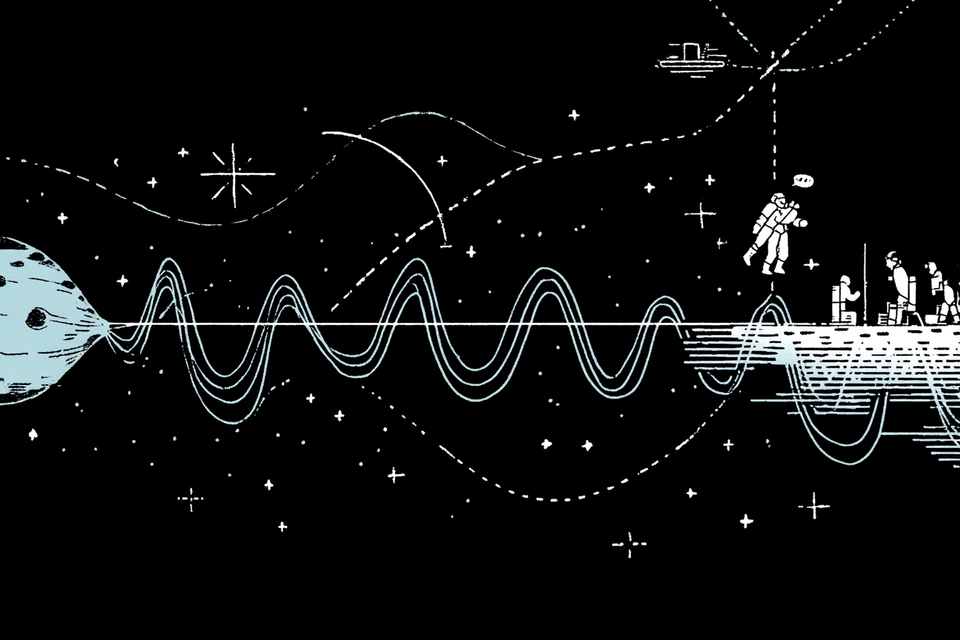

Do AI agents disrupt flow state?

Many AI tools are designed to boost productivity. That’s not inherently bad, but it’s worth asking whether these tools make it harder to enter a flow state or to do deep work.

At my first startup, one of the founders (at the time a senior associate at a large law firm) explained why lawyers loved having tools built directly into Word. It wasn’t about convenience. It was about maintaining flow. For some people, it takes 15–20 minutes or more to get there. Once they’re in it, drafting and creating, they produce their best work. Time passes effortlessly; you’re simply in the zone. Interruptions like switching applications can break that state.

Research confirms the benefits of flow. But how do AI tools, which I use frequently for coding, editing, and as a thought partner, affect our ability to reach that state? Instead of typing, writing, or creating directly, you’re having a conversation. You give instructions to an agent, then wait for output. In that downtime, you might spin up another agent or open another tab for an adjacent task. Your throughput skyrockets because you can run several tasks in parallel. But how much of that qualifies as deep work?

If we measure success primarily by productivity gains, the charts may well go up and to the right. The question for me is how much of that “productive time” was spent on critical thinking or deep work.

Finds

Boring is good //

There’s plenty I disagree with in this post by Scott Jenson, but it also makes some good points. Here are three I highlighted:

- “People are rushing too quickly into hyped technology, not understanding how to best use the tech… I’m playing with today’s models to figure out how they fall over, trying to find how they can be useful.”

- “You write to understand, which usually means writing a ton of awful text that must then be ruthlessly thrown away. Trying to ‘write automatically’ using LLMs completely circumvents this pain. Just like James T. Kirk, we need our pain! … But I don’t ask them to do any of the writing. I need my pain.”

- “We’re here to solve problems, not look cool.”

SKILLS 2026 //

This is always a great event, and the deadline to vote on the agenda is this week (Oct 24). You can help shape the event and even join in-person watch parties. I’m down for a watch party in LA.

Lessons from Science Fiction //

“What is the role of human scientists in an age when frontiers of scientific inquiry have moved beyond the comprehension of humans?”

This question comes from the opening of Ted Chiang’s short story “The Evolution of Human Science.” I love turning to science fiction to explore questions we might soon face in reality, and this one feels less hypothetical every month: AI recently helped discover a possible cancer-therapy pathway.

Until next time.
