State of AI 2025

OpenRouter, an AI API aggregator that gives developers a single, unified interface to hundreds of large language models, has released its State of AI report. It's a good source for understanding how people use large language models at scale. It covers more than one hundred trillion tokens across hundreds of models and dozens of providers. That is a large number, but still a small subset of total usage across providers.

Below is a structured breakdown of the findings, along with insights from external sources that help pressure test the data.

(Note: as I finished writing this post, OpenAI released its first state of enterprise AI report, which might also be relevant to this audience.)

Want to read it as a presentation? See below. Access it here if the link doesn't display well.

1. What the dataset captures

OpenRouter analyzed real usage patterns across two years of activity, focused on metadata. Categories were assigned using a small sample and then mapped to higher-level themes such as programming, roleplay, education, and productivity.

It is the closest thing I have seen to a live map of LLM behaviour across a suite of models.

2. Read the data with the right caveats

Note that OpenRouter skews toward developers, indie teams, and builders who want access to many models through a single API. Large banks, hospitals, and law firms often use private deployments, so they do not appear here. I recommend pairing this report with OpenAI's State of Enterprise AI 2025.

Small models also look underused because many teams now run them locally. Geography is inferred through billing, which introduces noise.

Treat this dataset as a strong signal for how builders and early adopters behave, not as a complete view of all industries.

3. A multi model, multi purpose, reasoning heavy ecosystem

- No one model wins all work. Closed models dominate high value tasks. Open models dominate high volume tasks.

- Programming and roleplay account for a large share of all tokens.

- Reasoning and agentic workflows are growing rapidly and now account for most traffic.

- Chinese open source models are surging.

- Retention patterns show that early cohorts decide which models become sticky.

4. Closed vs open source: different roles, both growing

Closed models

- Closed models sit in the high-cost, high-value bracket.

- Users pay for reliability, compliance and consistent reasoning quality. Price sensitivity is limited: a ten percent price cut barely shifts usage. Once a model becomes part of a critical workflow, switching is painful, because prompts and evals need to be rewritten and retested.

Open source

- Open models now carry close to a third of all traffic on OpenRouter.

- They win in high volume, cost sensitive tasks. The growth of Chinese models such as DeepSeek, Qwen and Kimi is substantial. Many are used for production grade workloads, not just experiments.

Model size

- Medium sized models in the 15B to 70B range are the new workhorses.

- Small models shift to local environments. Large models stay reserved for complex analysis and design tasks.

This division suggests a stable two tier market: premium closed systems for high stakes tasks and flexible open systems for everything else.

5. What people really use LLMs for

Programming

Programming grows into the dominant productivity workload.

Token share rises from roughly 11 percent to more than half of all usage.

Developers use AI to write code, debug, understand codebases and generate scripts. This is real work, not novelty.

Roleplay

Roleplay dominates open source usage.

More than half of all open source tokens fall into this category. Games, characters, companions and creative writing drive heavy demand. Many of these use cases require flexible content controls, which makes open models attractive.

Vertical industries

Legal, finance and healthcare appear, but in small volumes.

They sit in the low volume clusters.

These categories have potential but remain early.

This split highlights an uncomfortable truth. AI usage is shaped by developers and consumers, not by regulated industries.

6. Reasoning and agents move into the mainstream

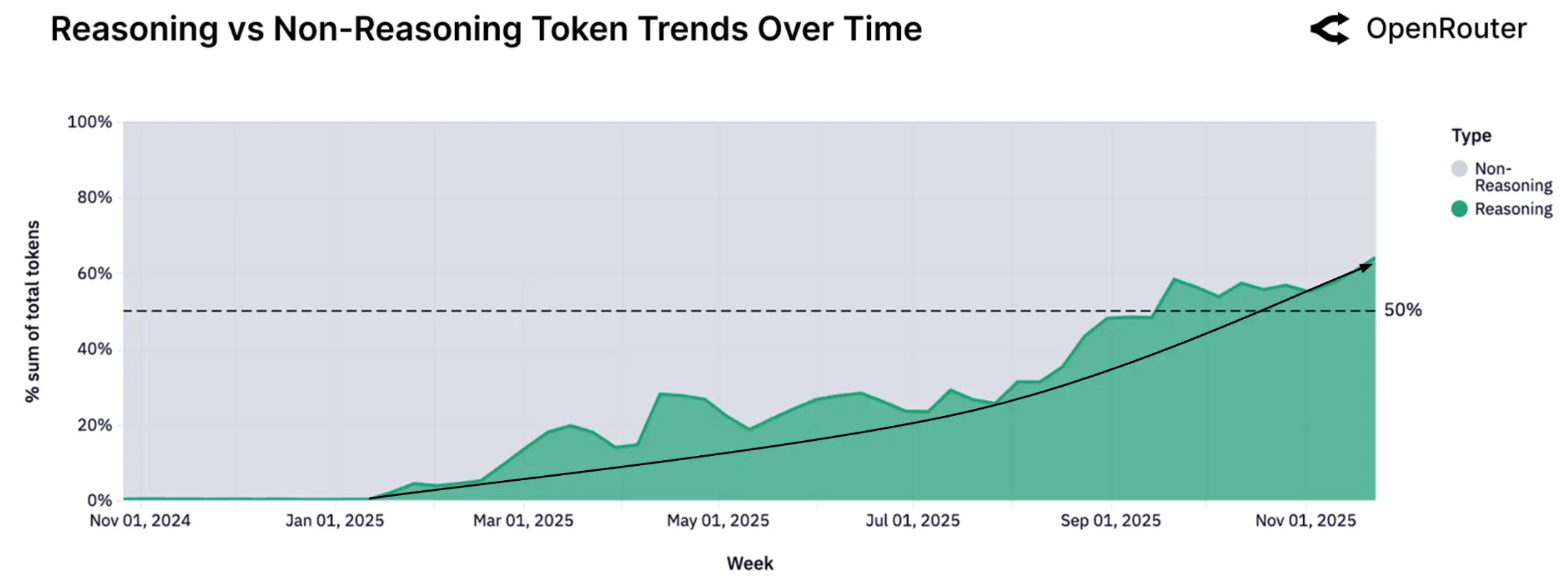

The release of OpenAI’s o1 model in late 2024 marks a turning point.

Reasoning focused models now take more than half of all tokens.

Prompt lengths grow. Completion lengths grow.

Developers rely on models that plan, call tools and take multi step actions.

The competitive edge is shifting from raw accuracy to reliable orchestration.

The next frontier is control, consistency and the ability to complete multi stage tasks.

7. AI is global, but English still dominates

North America holds less than half of all spend. Asia rises fast. Europe remains significant. The United States leads by country. Singapore appears unusually high, mostly due to routing behaviour from China.

English accounts for more than eighty percent of tokens.

Simplified Chinese ranks second, with strong growth driven by local model adoption.

This shows increasing globalisation, but also highlights a clear opportunity. High quality multilingual experiences remain underserved.

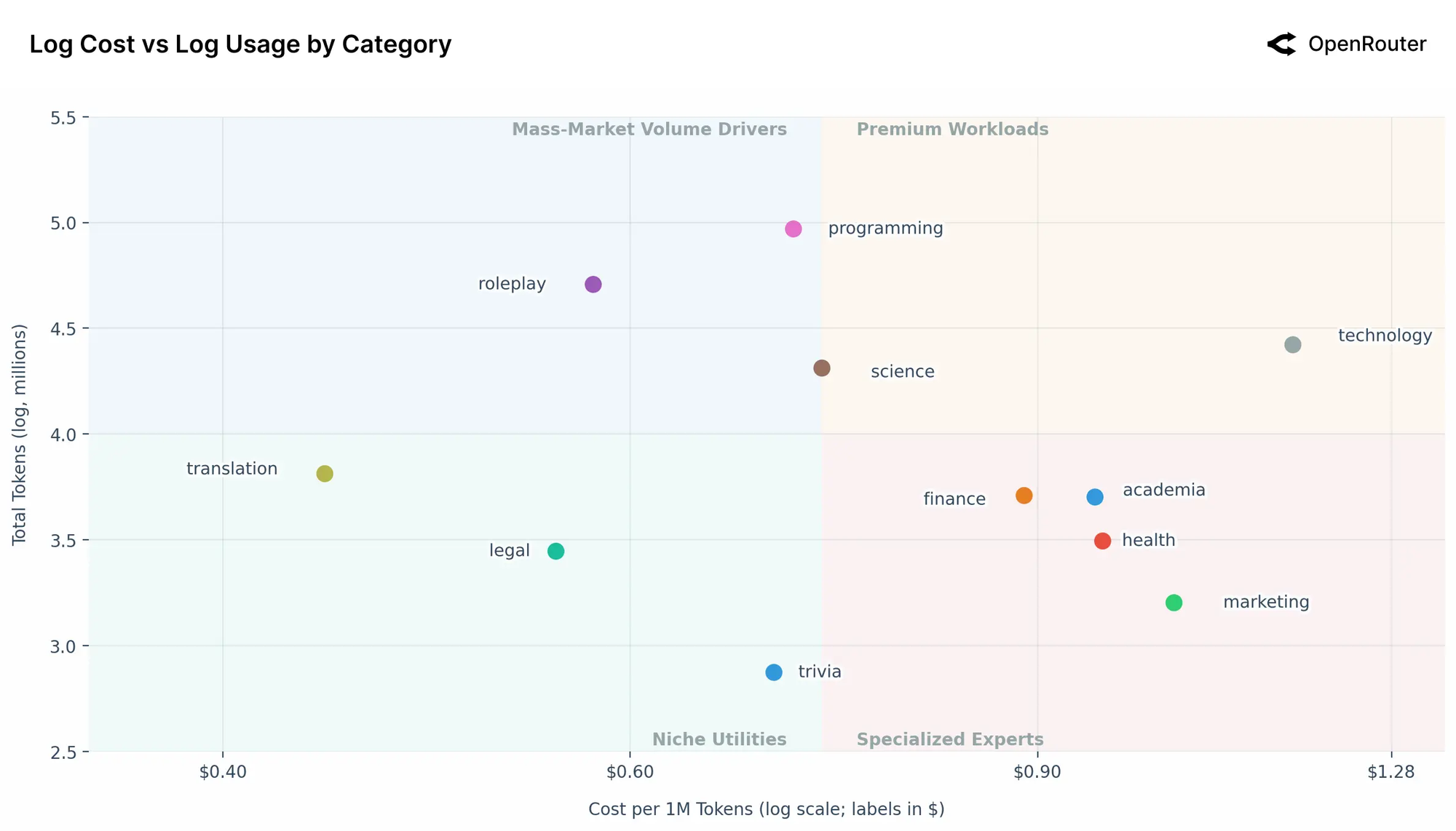

8. Cost vs usage: four segments of work

Costs and usage form four clear quadrants.

Premium

High cost and high usage.

Advanced technical reasoning and science tasks sit here.

Mass market

High usage at low or medium cost.

Programming and roleplay dominate this zone and define most of the total volume.

Specialized

High cost and low volume.

Finance, marketing and health fall here.

Users pay more for precision, but use these models less frequently.

Utility

Low cost and low volume.

Translation, legal and trivia.

Stable, low margin tasks.
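
To make the four segments concrete, here is a minimal Python sketch that sorts a workload category into a quadrant from its cost and usage. The `Category` type, the cutoff values and every number in `examples` are assumptions invented for illustration; the report does not publish these thresholds, and a single cutoff per axis folds "low or medium cost" into one bucket.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Category:
    name: str
    cost_per_million_tokens: float  # hypothetical unit: USD per 1M tokens
    token_volume: float             # hypothetical unit: relative usage


def quadrant(cat: Category, cost_cutoff: float, volume_cutoff: float) -> str:
    """Map a category to one of the four segments described above."""
    high_cost = cat.cost_per_million_tokens >= cost_cutoff
    high_volume = cat.token_volume >= volume_cutoff
    if high_cost and high_volume:
        return "Premium"
    if high_volume:
        return "Mass market"
    if high_cost:
        return "Specialized"
    return "Utility"


# Illustrative values only; none of these figures come from the report.
examples = [
    Category("science", 2.5, 900),
    Category("programming", 0.8, 5000),
    Category("finance", 3.0, 40),
    Category("translation", 0.3, 30),
]

labels = {c.name: quadrant(c, cost_cutoff=1.0, volume_cutoff=500) for c in examples}
print(labels)
```

The useful part is less the labels than the habit: plotting your own workloads on these two axes shows quickly which ones justify a premium model and which should run on something cheaper.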

9. Retention and the Glass Slipper effect

The most valuable insight in the report was how retention behaves. Retention shows whether a model becomes part of someone’s real workflow or is only tested once and forgotten.

Most models follow a predictable pattern. Users show up, run a few calls, and leave. This is normal. Curiosity drives the first interaction. Very few users return unless the model delivers something meaningful.

A small number of models break this pattern. OpenRouter calls this the Glass Slipper effect: a retention pattern in which a model immediately gains a small group of users who keep using it long term because it enables a capability they could not achieve before.

What the Glass Slipper effect captures

- A model launches.

- A specific group of users tries it.

- For some of them, the model enables a capability they did not have before.

- Those users adopt it deeply and continue using it long term.

Several models demonstrate this pattern.

- Gemini 2.5 Pro retains a significant portion of its launch cohort for many months, while later cohorts drop off quickly.

- Claude 4 Sonnet shows a similar signature, with early adopters maintaining high retention long after the initial spike.

- GPT-4o Mini displays the sharpest contrast. One early cohort remains active at high levels while all subsequent cohorts churn rapidly.

These cohorts form only when the model delivers a clear capability improvement and a real fit.
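
If you want to look for the same signature in your own usage logs, cohort retention is simple to compute. The sketch below is a minimal Python version, assuming a log of `(user_id, month_index)` pairs; the function name, the input shape and the toy data are all hypothetical, and this is not OpenRouter's methodology.

```python
from collections import defaultdict


def cohort_retention(events):
    """Compute retention curves per cohort.

    events: iterable of (user_id, month_index) pairs, one per active user-month.
    Returns {cohort_month: {months_since_first_use: fraction_of_cohort_active}}.
    """
    # Collect the set of months in which each user was active.
    active_months = defaultdict(set)
    for user, month in events:
        active_months[user].add(month)

    # A user's cohort is the first month in which they appear.
    cohorts = defaultdict(list)
    for months in active_months.values():
        cohorts[min(months)].append(months)

    retention = {}
    for start, members in sorted(cohorts.items()):
        last = max(max(m) for m in members)
        retention[start] = {
            offset: sum((start + offset) in m for m in members) / len(members)
            for offset in range(last - start + 1)
        }
    return retention


# Toy data, invented for illustration: users a-c join in month 0, d-f in month 1.
events = [
    ("a", 0), ("a", 1), ("a", 2), ("a", 3),
    ("b", 0), ("b", 1), ("b", 2), ("b", 3),
    ("c", 0),
    ("d", 1),
    ("e", 1), ("e", 2),
    ("f", 1),
]

curves = cohort_retention(events)
for start, curve in curves.items():
    print(start, {k: round(v, 2) for k, v in curve.items()})
```

In this toy data the launch cohort still has two of its three users active in month 3, while the later cohort is down to one of three after a single month. A gap like that between the first cohort and the ones that follow is the pattern to look for.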

10. What the report means for builders, innovation teams and legal professionals

1. You must design for multi model workflows

Different tasks need different models.

Closed systems excel at complex reasoning, compliance and legal grade reliability.

Open systems excel at rapid iteration, cost efficiency and flexible behaviour.

A modern AI stack needs both.
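
As a rough illustration of what "both" can mean in practice, here is a minimal Python sketch of a routing rule that sends high stakes or reasoning heavy work to a closed tier and everything else to an open tier. The `Task` fields and the two tier labels are placeholders I made up, not real model identifiers, and a production router would also weigh cost, latency and data handling rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    description: str
    high_stakes: bool
    needs_complex_reasoning: bool


# Placeholder tier labels (assumptions), not real model names.
CLOSED_TIER = "closed-premium-model"
OPEN_TIER = "open-workhorse-model"


def choose_tier(task: Task) -> str:
    """Pick a model tier using the two tier split described above."""
    if task.high_stakes or task.needs_complex_reasoning:
        return CLOSED_TIER
    return OPEN_TIER


tasks = [
    Task("Review an indemnity clause before signing", True, True),
    Task("Bulk-tag 10,000 documents by topic", False, False),
]

routing = {t.description: choose_tier(t) for t in tasks}
print(routing)
```

Keeping the rule explicit like this also makes it easy to re-test when a new model arrives, rather than rewriting every prompt at once.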

2. Agents change how products work

Single prompt interactions are fading.

The future belongs to structured flows where the model plans, reasons, retrieves, calls tools and manages steps. 2026 will continue to be the year of agents.

If you are building new legal workflows, agentic patterns should be your baseline.

3. Depth matters more than breadth

Retention tells you when a model or feature truly solves a problem.

Legal innovation teams should track which users adopt a capability and stay with it over time.

This helps avoid pilot fatigue and directs investment toward tools that deliver durable value.

Until next time.
